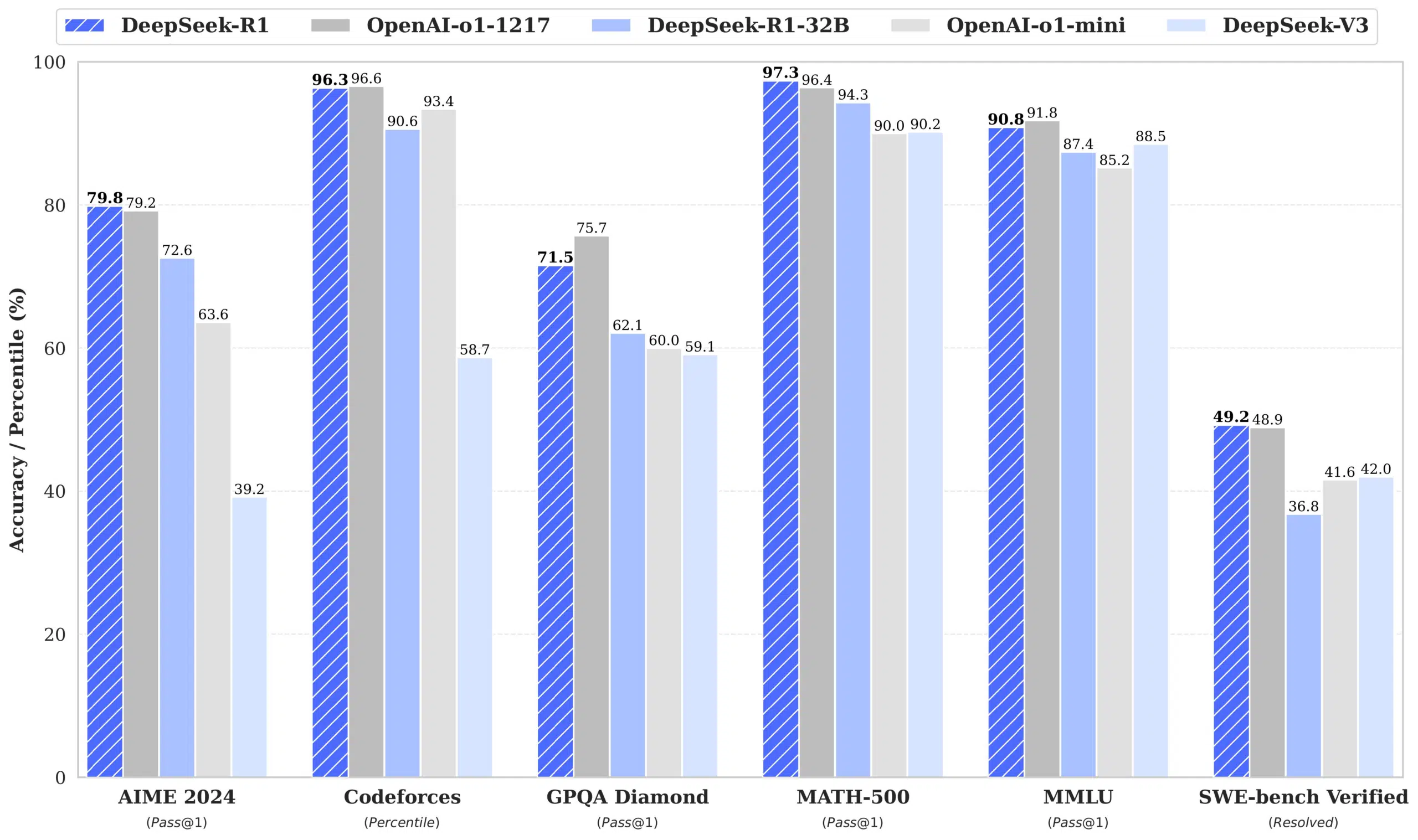

The image presents a bar chart comparing benchmark results for several DeepSeek AI and OpenAI models. The benchmarks include:

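- AIME 2024: mathematical problem-solving accuracy
- Codeforces: competitive programming, reported as a percentile ranking
- GPQA Diamond: graduate-level science questions testing knowledge and reasoning
- MATH-500: advanced mathematical problem solving
- MMLU (Massive Multitask Language Understanding): broad knowledge and understanding across many subjects
- SWE-bench Verified: software engineering, measured as the share of real-world coding issues resolved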

The results indicate that DeepSeek-R1 performs competitively, surpassing OpenAI models in several categories, particularly MATH-500 and MMLU. This reflects its strengths in logical reasoning and technical problem solving.